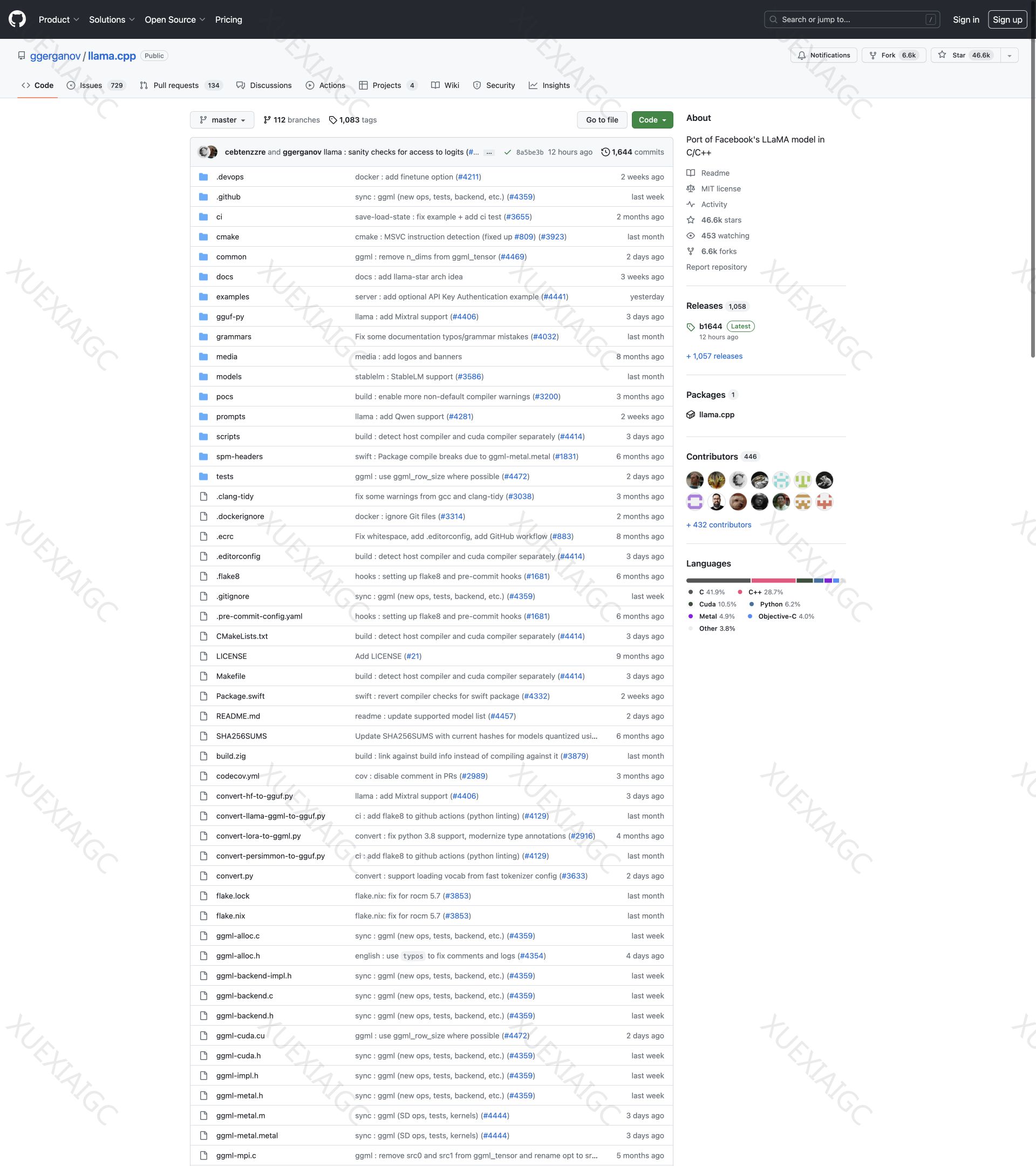

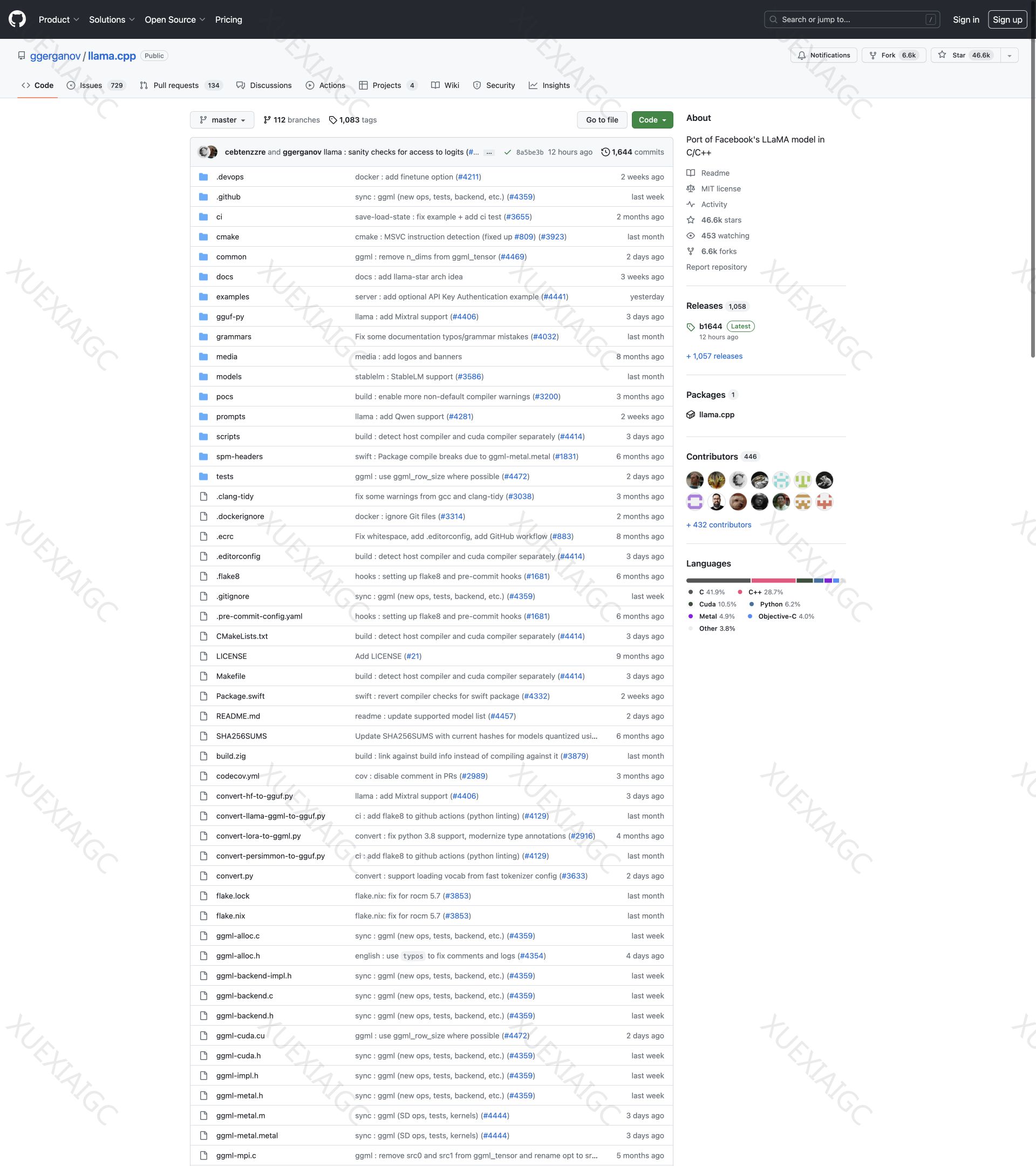

Inference of the LLaMA model in pure C/C++. The main goal of llama.cpp is to run the LLaMA model using 4-bit integer quantization on a MacBook.

